By Mike May, PhD

In a typical bioprocessing run, the engineered cells produce a drug, but that’s not all. They also express and release host cell proteins (HCPs). Depending on its identity and concentration, an HCP can affect the product’s efficacy or, more likely, its safety. An HCP might trigger various unwanted processes in a patient, including an extreme immune response or an allergic reaction. A mild HCP-driven response might just be a nuisance to a patient, but an extreme reaction can be deadly. Consequently, biomanufacturers strive to minimize the HCPs in a product. But minimizing HCPs is difficult. Even identifying all the HCPs in a product is difficult.

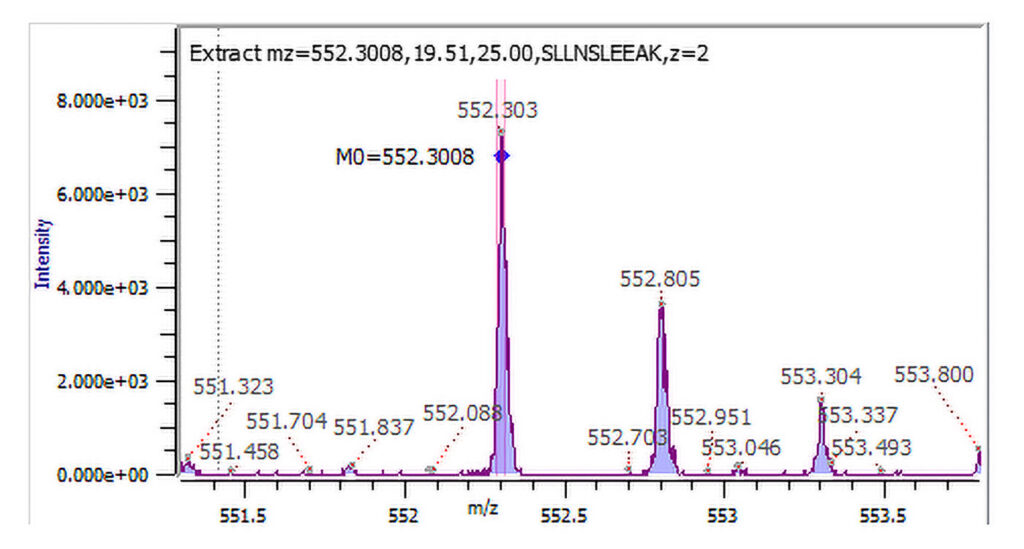

Analyzing a biotherapeutic product for HCPs can require a range of techniques, from basic biochemical methods, such as ELISAs, to advanced combinations of liquid chromatography (LC) and mass spectrometry (MS). The analysis should also start as soon as a company begins developing a new biotherapeutic, ideally resolving any HCP-related issues before the company makes a large investment in a product that might fail in risk-analysis studies.

Further complicating matters, a bioprocess must be optimized from cell selection through product manufacturing to keep HCPs at extremely low levels. “The general consensus has gotten into the parts-per-million levels,” says Kevin Van Cott, PhD, associate professor of chemical and biomolecular engineering at the University of Nebraska–Lincoln. “It really depends on the product, the route of administration, and the patient.”

For some HCPs in certain patient populations, levels in the hundreds of parts per million can be acceptable. For other HCPs, levels must be kept to single-digit parts per million. Some HCPs may even need to be at undetectable levels. “If you look in the literature, the number that’s most often quoted is 100 parts per million,” Van Cott points out, “but that’s just a number because there are no guidelines set by the regulatory agencies, which makes 100 parts per million just kind of a vague target.”
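To make the units concrete: in HCP work, parts per million is commonly expressed as nanograms of HCP per milligram of product. The short Python sketch below shows that arithmetic under stated assumptions. The function names, the sample concentrations, and the default 100 ppm target are hypothetical and chosen only for illustration; as Van Cott notes, 100 ppm is a commonly quoted figure, not a regulatory limit.

```python
def hcp_ppm(hcp_ng_per_ml: float, product_mg_per_ml: float) -> float:
    """Return HCP content in ppm, taken here as ng of HCP per mg of product."""
    if product_mg_per_ml <= 0:
        raise ValueError("product concentration must be positive")
    if hcp_ng_per_ml < 0:
        raise ValueError("HCP concentration cannot be negative")
    # ng/mL divided by mg/mL leaves ng/mg, which is parts per million by mass.
    return hcp_ng_per_ml / product_mg_per_ml


def meets_target(ppm: float, target_ppm: float = 100.0) -> bool:
    """Compare a measured level with a target; the default is illustrative only."""
    return ppm <= target_ppm


# Hypothetical sample: 250 ng/mL total HCP in a 5 mg/mL drug substance.
level = hcp_ppm(hcp_ng_per_ml=250.0, product_mg_per_ml=5.0)
print(f"{level:.1f} ppm, within 100 ppm target: {meets_target(level)}")
print(f"within 10 ppm target: {meets_target(level, target_ppm=10.0)}")
```

The same measurement can pass one target and fail another, which is why the acceptable level has to be set per product, route of administration, and patient population rather than read off a single number.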

Ideally, biomanufacturers want to make a product that contains no measurable HCPs. “You want to get it as pure as possible,” Van Cott insists.

Across-the-board analysis

Van Cott and his colleagues often work with startup companies that lack the MS equipment for HCP analysis. That work often involves limiting HCPs in process development. “We also work with products in later stage development—as well as with commercially licensed products that all of a sudden have an HCP problem,” Van Cott says. “You need to determine if known HCPs are becoming more abundant in the product, or if new HCPs are showing up, and you need to see where the HCPs are coming from in the process.”

If a company comes to Van Cott with a good drug substance, he doesn’t expect to see a wide variety of HCPs, but the range depends on the class of the product. “Most people are pretty good at purifying monoclonal antibodies right now,” he observes. “If you’re entering clinical trials with a monoclonal antibody, your host cell protein levels should be down to around 10 parts per million or less for total HCPs.” He adds that for other product classes, difficulties in purification can lead to HCPs in the hundreds of parts per million range.

Much of the challenge of analyzing HCPs arises from the available tools. “We’re using equipment and software for data analysis in an off-label scenario,” Van Cott explains. “They were not specifically designed for HCP analysis.” So, experiments must be designed to exploit the strengths of the equipment and software and minimize their weaknesses.

“We’re going after the very-low-abundance proteins in a sample, and there’s a lot of competition from the product protein,” Van Cott says. So, methods and data analysis must be carefully designed to ensure that the product protein does not dominate the experiment. In some cases, results from HCP studies must be examined one protein at a time.

Where possible, the HCP data go into a software search engine that identifies the proteins, but that is far from foolproof. “I’ve worked with search engines that generate up to 50% false positives for host cell proteins,” Van Cott relates. “No piece of software is perfect. In HCP work, a false positive is just as bad as a false negative—both of those could be catastrophic to a product, determining if it makes it through clinical trials or not.”

Van Cott hopes to improve the software for HCP analysis. To that end, he is working with Protein Metrics, which makes what he considers “the best search engine software for wholesale protein analysis.” He indicates that he is working with the company “to see if we can make it even better, a little bit more reliable.”

Algorithms for immunogenic risk

Other vendors offer software-based analysis of HCPs. At EpiVax, programmers developed a toolkit that comprises several integrated algorithms for immunogenicity screening of HCPs, known as ISPRI-HCP (Interactive Screening and Protein Reengineering Interface for Host Cell Proteins). The web-based software relies on well-established algorithms used by many small and large biopharma companies for conducting risk assessments and for screening and reengineering biologic candidates.

EpiVax has demonstrated that its software can be used to survey the Chinese hamster ovary (CHO) HCP immunogenicity landscape. “We took a large dataset of commonly found CHO cell protein impurities and performed an in silico immunogenicity risk assessment,” reports Kirk Haltaufderhyde, PhD, biomedical scientist, EpiVax. “We found that the CHO HCPs covered a wide range of immune responses, and our results correlated well with known immunogenic CHO proteins.” Comparing the ISPRI-HCP survey of CHO proteins with clinical reports suggested that EpiVax is on the right track. “It gave us evidence that the application of our algorithms for HCP assessments are working well,” Haltaufderhyde remarks. “We’re continuing to collect experimental data to advance the technology.”

Although the EpiVax algorithm is proprietary, Haltaufderhyde provides some insight into the process. For one thing, the software generates two scores: an EpiMatrix Score and a JanusMatrix Score. The EpiMatrix Score measures the T-cell epitope content of an HCP and the probability of each epitope binding to a T cell, which could trigger an unwanted immune response. “That score will provide insight into how immunogenic that particular protein could be,” Haltaufderhyde explains. In addition, the JanusMatrix Score uses an algorithm that determines how human-like the T-cell epitopes are in an HCP. “The more human-like it is, the more likely that HCP can be tolerated in humans,” Haltaufderhyde says.

Seeking better separations

In addition to companies that are developing software, there are companies that are working to improve the technology behind HCP analysis. And this technology could stand improvement. As Guojie Mao, PhD, principal scientist, R&D, Lonza, says, “The lack of a formal limit for HCPs puts pressure upon manufacturers to accurately test for potential risks and make products that are as clean and as safe as possible.”

At Lonza, Mao oversees the development of assays. The goal, he says, is “to give more accurate and sensitive data readouts, ultimately allowing for improved efficiency in the development of a drug and the clearance of HCPs.”

At the BioPharmaceutical Emerging Best Practices Association’s HCP conference in May, Lonza described its two-dimensional difference gel electrophoresis (2D DIGE) method. “This is a sensitive and robust approach to separate HCP populations and provide informative comparisons of HCP profile changes during the manufacturing process, and it provides a critical reagent characterization for HCP analysis,” Mao explains. “We have optimized 2D DIGE in three key respects for HCP analysis: first, as an alternative dye to conventional options for HCP labeling; second, as another option for the loading method; and third, as an optimized sample buffer.” Together, these optimizations enabled a protein labeling efficiency of 94% or above for all HCPs.

Having achieved this desirable result, Lonza is optimistic about making additional HCP advances. “Sustainability is essential,” Mao declares. “We need to ensure that our stock is capable of lasting for at least 10 years to reduce the need for reproduction (and the associated impacts) and to reduce wastage.”

In addition, Lonza is considering automation. “Currently, HCP development processes are very labor consuming, but automation offers an opportunity to automate certain steps in the creation and development of assays,” Mao says. “We’re currently shortlisting several such technologies for use in HCP testing across Lonza.”

Overall, Lonza hopes to bring more teamwork to HCP analysis. “There is an important role for industry collaboration and knowledge sharing,” Mao relates. “Globally, there aren’t many people working on HCP analysis, and moving forward, collaboration will be essential.”

Lives are on the line

Van Cott characterizes HCP analysis as follows: “A lot of times, it’s like being a detective: Companies come to you with problems, and you get to use these really incredible tools to help them figure out and fix those problems.” Nonetheless, being Sherlock Holmes can be stressful. “Big, important decisions are being made on the basis of your data and results, and you learn very quickly to take it very seriously,” Van Cott says. “You can’t just be willy-nilly, flip through the data, and just hope that things work out.”

Beyond the economic success of a biotherapeutic protein, even more is on the line. “You have to be extremely careful and aware of what you’re doing,” Van Cott emphasizes. “It all comes down to patient safety and efficacy of the products.”