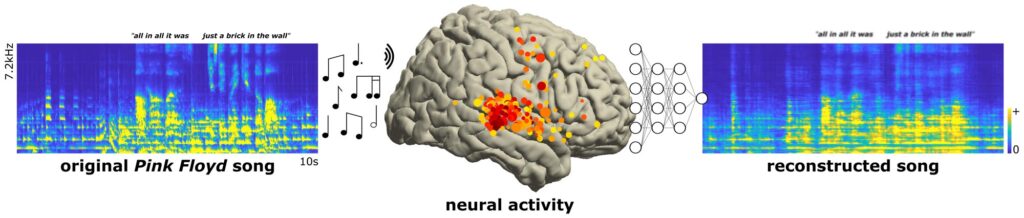

Researchers at the University of California (UC), Berkeley, have demonstrated that recognizable versions of classic Pink Floyd rock music can be reconstructed just from the brain activity that was recorded while patients listened to one of the band’s songs. The study, analyzing data from more than 2,000 electrodes placed directly on the surface of the brains of 29 neurosurgical patients, used nonlinear modeling to decode brain activity and reconstruct the song, “Another Brick in the Wall, Part 1.”

The phrase “All in all it was just a brick in the wall” came through recognizably in the reconstructed song, with its rhythms intact and its words muddy but decipherable. This is the first time researchers have reconstructed a recognizable song from brain recordings. The team’s encoding models also revealed a new cortical subregion in the temporal lobe that underlies rhythm perception, which could be exploited by future brain-machine interfaces.

The reconstruction shows the feasibility of recording and translating brain waves to capture the musical elements of speech, as well as the syllables. In humans, these musical elements, called prosody—rhythm, stress, accent, and intonation—carry meaning that the words alone do not convey. And because these intracranial electroencephalography (iEEG) recordings can be made only from the surface of the brain—as close as you can get to the auditory centers—no one will be eavesdropping on the songs in anyone’s head anytime soon.

But for people who have trouble communicating, whether because of stroke or paralysis, such recordings from electrodes on the brain surface could help reproduce the musicality of speech that’s missing from today’s robot-like reconstructions. As brain recording techniques improve, it may someday be possible to make such recordings without opening the skull, perhaps using sensitive electrodes attached to the scalp.

“We reconstructed the classic Pink Floyd song ‘Another Brick in the Wall’ from direct human cortical recordings, providing insights into the neural bases of music perception and into future brain decoding applications,” commented postdoctoral fellow Ludovic Bellier, PhD. Bellier is first author of the team’s paper in PLOS Biology, titled “Music can be reconstructed from human auditory cortex activity using nonlinear decoding models.” The authors suggest that they have added “another brick in the wall of our understanding of music processing in the human brain.”

Music is a universal experience across all ages and cultures and is a core part of our emotional, cognitive, and social lives, the authors wrote. “Understanding the neural substrate supporting music perception, defined here as the processing of musical sounds from acoustics to neural representations to percepts and distinct from music production, is a central goal in auditory neuroscience.”

Past research has shown that computer modeling can be used to decode and reconstruct speech, but a predictive model for music that includes elements such as pitch, melody, harmony, and rhythm, as well as different regions of the brain’s sound-processing network, was lacking. To build such a model, the UC Berkeley team applied nonlinear decoding to brain activity recorded from 2,668 electrodes placed directly on the cortical surface (electrocorticography, or ECoG) of 29 patients as they listened to the classic rock track.

Currently, scalp EEG can detect an individual letter from a stream of letters, but it takes at least 20 seconds to identify each one, which makes communication slow and effortful, noted Robert Knight, MD, a neurologist and UC Berkeley professor of psychology in the Helen Wills Neuroscience Institute, who conducted the study with Bellier. “Noninvasive techniques are just not accurate enough today,” Bellier said. “Let’s hope, for patients, that in the future we could, from just electrodes placed outside on the skull, read activity from deeper regions of the brain with a good signal quality. But we are far from there.”

The brain-machine interfaces used today to help people communicate when they’re unable to speak can decode words, but the sentences produced have a robotic quality akin to how the late Stephen Hawking sounded when he used a speech-generating device.

“Right now, the technology is more like a keyboard for the mind,” Bellier said. “You can’t read your thoughts from a keyboard. You need to push the buttons. And it makes kind of a robotic voice; for sure there’s less of what I call expressive freedom.”

In 2012, Knight, postdoctoral fellow Brian Pasley, and their colleagues were the first to reconstruct the words a person was hearing from recordings of brain activity alone. More recently, other researchers have taken Knight’s work much further. Eddie Chang, a UC San Francisco neurosurgeon and senior co-author on the 2012 paper, has recorded signals from the motor area of the brain associated with jaw, lip, and tongue movements to reconstruct the speech intended by a paralyzed patient, with the words displayed on a computer screen.

That work, reported in 2021, employed artificial intelligence to interpret the brain recordings from a patient trying to vocalize a sentence based on a set of 50 words. While Chang’s technique is proving successful, the newly reported study suggests that recording from the auditory regions of the brain, where all aspects of sound are processed, can capture other aspects of speech that are important in human communication.

“Decoding from the auditory cortices, which are closer to the acoustics of the sounds, as opposed to the motor cortex, which is closer to the movements that are done to generate the acoustics of speech, is super promising,” Bellier added. “It will give a little color to what’s decoded.”

For the new study, Bellier reanalyzed brain recordings obtained in 2012 and 2013 while patients listened to an approximately three-minute segment of the Pink Floyd song, which is from the 1979 album *The Wall*. He hoped to go beyond previous studies, which had tested whether decoding models could identify different musical pieces and genres, and actually reconstruct musical phrases through regression-based decoding models. “… we used stimulus reconstruction to investigate the spatiotemporal dynamics underlying music perception,” the authors explained. “Stimulus reconstruction consists in recording the population neural activity elicited by a stimulus and then evaluating how accurately this stimulus can be reconstructed from neural activity through the use of regression-based decoding models.”
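Stimulus reconstruction can be illustrated with a small, self-contained sketch. It is not the study’s pipeline. It uses synthetic data instead of ECoG recordings, a linear ridge-regression decoder instead of the nonlinear models the team used, and a hypothetical three-band “spectrogram.” Each band is decoded from time-lagged activity across all simulated electrodes, and accuracy is scored as the Pearson correlation between the original and reconstructed band on held-out time points (an assumed, though common, metric). All function names and parameters (`simulate`, `lagged_features`, `ridge_fit`, `reconstruct`, `n_lags`, `alpha`) are illustrative.

```python
import math
import random


def simulate(n_time=400, n_bins=3, n_elec=8, delay=1, noise=0.2, seed=0):
    """Hypothetical toy data, not real recordings: a 3-band 'spectrogram' and
    electrodes that respond to it `delay` samples later, plus Gaussian noise."""
    rng = random.Random(seed)
    stim = [[math.sin(0.05 * (b + 1) * t) + 0.5 * math.sin(0.13 * t + b)
             for b in range(n_bins)] for t in range(n_time)]
    # Each simulated electrode weights the bands with random gains.
    mix = [[rng.gauss(0, 1) for _ in range(n_bins)] for _ in range(n_elec)]
    neural = []
    for t in range(n_time):
        src = stim[t - delay] if t >= delay else [0.0] * n_bins
        neural.append([sum(m * s for m, s in zip(mix[e], src)) + rng.gauss(0, noise)
                       for e in range(n_elec)])
    return stim, neural


def lagged_features(neural, n_lags):
    """Row t = bias term + neural samples t, t+1, ..., t+n_lags-1 (zero-padded at
    the end), because the neural response trails the sound that evoked it."""
    n_elec = len(neural[0])
    rows = []
    for t in range(len(neural)):
        row = [1.0]
        for lag in range(n_lags):
            row.extend(neural[t + lag] if t + lag < len(neural) else [0.0] * n_elec)
        rows.append(row)
    return rows


def ridge_fit(X, y, alpha):
    """Solve (X^T X + alpha*I) w = X^T y by Gaussian elimination with partial pivoting."""
    p = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) + (alpha if i == j else 0.0) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(p)]
    for col in range(p):
        piv = max(range(col, p), key=lambda k: abs(A[k][col]))
        A[col], A[piv] = A[piv], A[col]
        for k in range(col + 1, p):
            f = A[k][col] / A[col][col]
            for c in range(col, p + 1):
                A[k][c] -= f * A[col][c]
    w = [0.0] * p
    for i in reversed(range(p)):
        w[i] = (A[i][p] - sum(A[i][j] * w[j] for j in range(i + 1, p))) / A[i][i]
    return w


def pearson(a, b):
    """Pearson correlation, used here as the reconstruction-accuracy score."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def reconstruct(stim, neural, n_lags=3, alpha=1.0, train_frac=0.8):
    """Fit one ridge decoder per band on the training span; return held-out r per band."""
    X = lagged_features(neural, n_lags)
    split = int(len(X) * train_frac)
    scores = []
    for b in range(len(stim[0])):
        y = [frame[b] for frame in stim]
        w = ridge_fit(X[:split], y[:split], alpha)
        y_hat = [sum(wi * xi for wi, xi in zip(w, row)) for row in X[split:]]
        scores.append(pearson(y[split:], y_hat))
    return scores


if __name__ == "__main__":
    stim, neural = simulate()
    print([round(r, 3) for r in reconstruct(stim, neural)])
```

Lagging the features forward in time encodes the assumption that the brain responds shortly after each sound; replacing `ridge_fit` with a nonlinear regressor would be the closer analogue of the nonlinear decoding the study describes.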

The newly reported results showed that activity at 347 of the electrodes was specifically related to the music. These electrodes were mostly located in three regions of the brain: the superior temporal gyrus (STG), the sensory-motor cortex (SMC), and the inferior frontal gyrus (IFG). The researchers also confirmed that the right side of the brain is more attuned to music than the left side. “Language is more left brain. Music is more distributed, with a bias toward right,” Knight said. “It wasn’t clear it would be the same with musical stimuli,” Bellier added. “So here we confirm that that’s not just a speech-specific thing, but that it’s more fundamental to the auditory system and the way it processes both speech and music.”

“Specifically, redundant musical information was distributed between STG, SMC, and IFG in the left hemisphere, whereas unique musical information was concentrated in STG in the right hemisphere,” the investigators noted in their paper. “Such spatial distribution is reminiscent of the dual-stream model of speech processing.”

Bellier emphasized that the study, which used artificial intelligence to decode brain activity and then encode a reproduction, did not merely create a black box to synthesize speech. He and his colleagues were also able to pinpoint new areas of the brain involved in detecting rhythm, such as a thrumming guitar, and discovered that some portions of the auditory cortex—in the superior temporal gyrus, located just behind and above the ear—respond at the onset of a voice or a synthesizer, while other areas respond to sustained vocals.

“The anatomo-functional organization reported in this study may have clinical implications for patients with auditory processing disorder,” the authors commented. “For example, the musical perception findings could contribute to development of a general auditory decoder that includes the prosodic elements of speech based on relatively few, well-located electrodes.”

Analysis of song elements revealed a unique region in the STG that represents rhythm, in this case the guitar rhythm in the song the patients heard. To find out which regions and which song elements were most important, the team reran the reconstruction after removing different subsets of electrodes, grouped by anatomical location or by the song element they responded to, and compared each reconstruction with the original song. Anatomically, they found that reconstructions were most affected when electrodes from the right STG were removed.

Functionally, removing the electrodes related to sound onset or rhythm also degraded reconstruction accuracy, indicating their importance in music perception. “Decoding accuracy was also impacted by the functional and anatomical features of the electrodes included in the model,” the scientists wrote. “While removing 167 sustained electrodes did not impact decoding accuracy, removing 43 right rhythmic electrodes reduced decoding accuracy. This is best illustrated by the ability to reconstruct a recognizable song from the data of a single patient, with 61 electrodes located on the right STG.”
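The ablation logic described above (drop a group of electrodes, refit the decoder, and compare accuracy with the full model) can be sketched as follows. This is a toy under stated assumptions, not the authors’ code: the data are synthetic, the decoder is a linear ridge regression, and the electrode groups (“informative” and “uninformative”) are hypothetical stand-ins for anatomical or functional groupings such as right-STG or rhythmic electrodes. Because the informative electrodes are built to carry the signal, the outcome is fixed by construction; the sketch shows the procedure, not evidence for the study’s findings.

```python
import math
import random


def simulate(n_time=400, seed=1):
    """Hypothetical toy data, not real ECoG: electrodes 0-3 track a stimulus
    envelope with different gains; electrodes 4-7 carry only noise."""
    rng = random.Random(seed)
    envelope = [math.sin(0.07 * t) + 0.4 * math.sin(0.31 * t) for t in range(n_time)]
    gains = [1.0, 0.8, 1.2, 0.6, 0.0, 0.0, 0.0, 0.0]
    neural = [[g * s + rng.gauss(0, 0.5) for g in gains] for s in envelope]
    return envelope, neural


def ridge_fit(X, y, alpha):
    """Solve (X^T X + alpha*I) w = X^T y by Gaussian elimination with partial pivoting."""
    p = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) + (alpha if i == j else 0.0) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(p)]
    for col in range(p):
        piv = max(range(col, p), key=lambda k: abs(A[k][col]))
        A[col], A[piv] = A[piv], A[col]
        for k in range(col + 1, p):
            f = A[k][col] / A[col][col]
            for c in range(col, p + 1):
                A[k][c] -= f * A[col][c]
    w = [0.0] * p
    for i in reversed(range(p)):
        w[i] = (A[i][p] - sum(A[i][j] * w[j] for j in range(i + 1, p))) / A[i][i]
    return w


def pearson(a, b):
    """Pearson correlation, used here as the decoding-accuracy score."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


def decode_score(envelope, neural, keep, alpha=1.0, train_frac=0.8):
    """Fit a ridge decoder on the `keep` electrodes only; return held-out Pearson r."""
    X = [[1.0] + [row[e] for e in keep] for row in neural]  # bias term + kept electrodes
    split = int(len(X) * train_frac)
    w = ridge_fit(X[:split], envelope[:split], alpha)
    y_hat = [sum(wi * xi for wi, xi in zip(w, row)) for row in X[split:]]
    return pearson(envelope[split:], y_hat)


def ablation(envelope, neural, groups, alpha=1.0):
    """Score the model using every grouped electrode, then refit with each group removed."""
    all_elec = sorted(e for members in groups.values() for e in members)
    scores = {"all electrodes": decode_score(envelope, neural, all_elec, alpha)}
    for name, members in groups.items():
        keep = [e for e in all_elec if e not in members]
        scores["without " + name] = decode_score(envelope, neural, keep, alpha)
    return scores


if __name__ == "__main__":
    envelope, neural = simulate()
    groups = {"informative": [0, 1, 2, 3], "uninformative": [4, 5, 6, 7]}
    for label, r in ablation(envelope, neural, groups).items():
        print(f"{label}: r = {r:.3f}")
```

In practice, comparing a removed group against random electrode subsets of the same size helps separate the effect of *which* electrodes were dropped from the effect of *how many*; this sketch omits that step.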

These findings could have implications for brain-machine interfaces, such as prosthetic devices that help improve the perception of prosody, the rhythm and melody of speech. “This last result shows the feasibility of this stimulus reconstruction approach in a clinical setting and suggests that future BCI applications should target STG implantation sites in conjunction with functional localization rather than solely relying on a high number of electrodes,” the researchers pointed out.

Knight is embarking on new research to understand the brain circuits that allow some people with aphasia due to stroke or brain damage to communicate by singing when they cannot otherwise find the words to express themselves.

“It’s a wonderful result,” said Knight, commenting on the newly reported study. “One of the things for me about music is it has prosody and emotional content. As this whole field of brain-machine interfaces progresses, this gives you a way to add musicality to future brain implants for people who need it, someone who’s got ALS or some other disabling neurological or developmental disorder compromising speech output. It gives you an ability to decode not only the linguistic content, but some of the prosodic content of speech, some of the affect. I think that’s what we’ve really begun to crack the code on.”

Summarizing their research, the authors commented, “Through an integrative anatomo-functional approach based on both encoding and decoding models, we confirmed a right-hemisphere preference and a primary role of the STG in music perception, evidenced a new STG subregion tuned to musical rhythm, and defined an anterior–posterior STG organization … we found that the STG encodes the song’s acoustics through partially overlapping neural populations tuned to distinct musical elements and delineated a novel STG subregion tuned to musical rhythm.”